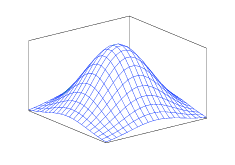

In artificial intelligence we often deal with large data sets that have many features or variables, with no clear way to visualize or interpret the relationships between those variables. Principal component analysis (PCA) is a technique for finding a basis set that may represent the data well under some circumstances. PCA finds a set of orthogonal basis vectors aligned with the directions of maximum variance, the so-called principal components. PCA also ranks the components by importance (i.e. by variance).

Principal component analysis assumes that the distributions of the random variables are characterized by their mean and variance, as the Gaussian distribution is, for example. PCA also assumes that the direction of maximum variance is indeed the most “important”. This assumption implies that the data has a high signal-to-noise ratio: the variance added by any noise is small in comparison to the variance from the important dynamics of the system.

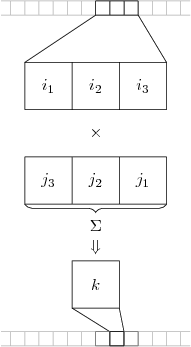

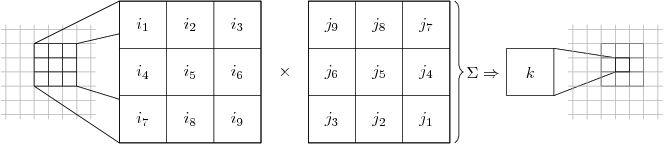

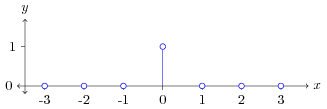

The principal components are calculated by finding the eigenvectors and eigenvalues of the covariance matrix. The covariance matrix $C$ is a square matrix which describes the covariance between random variables, where $C_{ij}$ is the covariance between the $i$th and $j$th random variables. The random variables must be in mean deviation form, meaning that the mean has been subtracted so the distribution of each random variable has zero mean ($\mu = 0$). The eigenvalues and eigenvectors are usually arranged such that $\Lambda$ is a diagonal matrix where the value of $\Lambda_{ii}$ is the $i$th eigenvalue $\lambda_i$, and $V$ is a matrix where the $i$th column of $V$ is the $i$th eigenvector. Note that the $n \times n$ covariance matrix has $n$ eigenvalue/eigenvector pairs, so $\Lambda$ and $V$ are $n \times n$ matrices as well. At this point the eigenvectors can be ranked by importance by sorting the eigenvalues in $\Lambda$ in descending order. Since each eigenvector is associated with a particular eigenvalue, the columns of $V$ must be rearranged in the same way so that the $i$th column still corresponds to the $i$th eigenvalue.
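The steps in this paragraph can be sketched with NumPy. This is a minimal illustration rather than a reference implementation: the function name `pca_eig`, the synthetic data, and the choice of `np.linalg.eigh` (which suits symmetric matrices such as a covariance matrix) are assumptions made for the example.

```python
import numpy as np

def pca_eig(X):
    """Hypothetical helper. X has shape (n_samples, n_features).

    Returns the eigenvalues (descending) and the matching eigenvectors
    (as columns) of the covariance matrix of X.
    """
    # Mean deviation form: subtract the mean of each variable (column).
    Xc = X - X.mean(axis=0)
    # Covariance matrix; rowvar=False means columns are the variables.
    C = np.cov(Xc, rowvar=False)
    # eigh is intended for symmetric matrices and returns ascending eigenvalues.
    eigvals, eigvecs = np.linalg.eigh(C)
    # Rank by importance: sort eigenvalues in descending order and
    # reorder the eigenvector columns the same way.
    order = np.argsort(eigvals)[::-1]
    return eigvals[order], eigvecs[:, order]

# Synthetic data (an assumption): three variables with very different spreads.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3)) * np.array([3.0, 1.0, 0.2])

eigvals, eigvecs = pca_eig(X)
print(eigvals)   # variances along each principal component, largest first
print(eigvecs)   # column i is the eigenvector for eigvals[i]
```

Sorting with a single index array and applying it to both the eigenvalues and the eigenvector columns keeps each eigenvector paired with its eigenvalue.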

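If the goal is to reduce the dimensionality of the data set (the number of random variables), the principal components which are least important may be discarded. This leaves us with two smaller matrices: a diagonal matrix holding the $k$ largest eigenvalues and a matrix whose columns are the corresponding $k$ eigenvectors, where $k$ is the number of principal components to keep.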
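As a small sketch of this truncation step, the snippet below keeps the top components from a toy eigendecomposition. The helper name `keep_top_k`, the toy eigenvalues, and the "fraction of variance retained" measure are illustrative assumptions, not part of the original text.

```python
import numpy as np

def keep_top_k(eigvals, eigvecs, k):
    """Hypothetical helper: keep the k components with the largest eigenvalues.

    Returns a (k, k) diagonal eigenvalue matrix and an (n, k) eigenvector matrix.
    """
    # Indices of the k largest eigenvalues, largest first.
    order = np.argsort(eigvals)[::-1][:k]
    return np.diag(eigvals[order]), eigvecs[:, order]

# Toy eigendecomposition (an assumption): unsorted eigenvalues and
# axis-aligned eigenvectors, so the result is easy to check by eye.
eigvals = np.array([0.5, 4.0, 1.5])
eigvecs = np.eye(3)

L_k, V_k = keep_top_k(eigvals, eigvecs, k=2)

# Fraction of the total variance retained by the kept components.
explained = np.trace(L_k) / eigvals.sum()
print(L_k.shape, V_k.shape, explained)
```

Here the two kept components carry 5.5 of the 6.0 units of total variance, so `explained` is about 0.917.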

This matrix of eigenvectors represents a linear transform which orients the basis vectors in the directions of maximal variance, while maintaining orthogonality.
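To make the effect of the transform concrete, the following sketch projects centered data onto the eigenvectors and checks two properties: the eigenvector matrix is orthonormal, and the covariance of the transformed data is diagonal, so the new variables are uncorrelated. The synthetic data and all variable names are assumptions made for the example.

```python
import numpy as np

# Correlated 2-D data (an assumption): mix two independent normal variables.
rng = np.random.default_rng(1)
A = np.array([[2.0, 0.0],
              [1.5, 0.5]])
X = rng.normal(size=(1000, 2)) @ A.T

# Mean deviation form and covariance matrix.
Xc = X - X.mean(axis=0)
C = np.cov(Xc, rowvar=False)

# Eigendecomposition, sorted so the first column has the largest variance.
eigvals, V = np.linalg.eigh(C)
order = np.argsort(eigvals)[::-1]
eigvals, V = eigvals[order], V[:, order]

# Apply the linear transform: express each sample in the eigenvector basis.
Y = Xc @ V

# Orthogonality is maintained: V^T V is the identity.
print(np.allclose(V.T @ V, np.eye(2)))

# The covariance of the transformed data is diagonal, with the
# eigenvalues (the variances along each component) on the diagonal.
C_Y = np.cov(Y, rowvar=False)
print(np.allclose(C_Y, np.diag(eigvals)))
```

To reduce dimensionality at the same time, one would multiply by only the first few columns of `V` instead of the full matrix.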