This article deals with sampling and reconstructing signals. Introductory information to signal processing can be found here, and background on Fourier Transforms can be found here. Sampling a continuous-time signal to a discrete-time signal involves measuring a series of data points on a continuous waveform. Ideally, the discrete-time signal should contain all of the information of the continuous-time signal. If the sampling period is too large (that is, if the samples are taken too far apart), it is possible to lose information when sampling. The act of sampling a continuous-time signal can be modeled by multiplying the signal by an impulse train, or Dirac comb. Similar to the discrete-time Kronecker delta, the Dirac delta function represents an impulse as a continuous function:

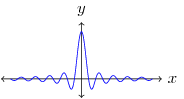

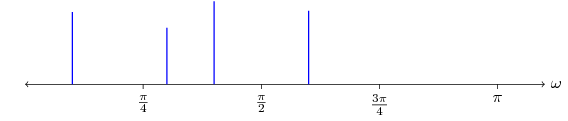

The impulse train is formed by taking a sum of shifted Dirac deltas, $s(t) = \sum_{n=-\infty}^{\infty} \delta(t - nT)$, where $T$ is the sampling period. Multiplying the signal $x(t)$ by the impulse train, called an ideal sampler, gives a continuous-time signal with scaled impulses at the sampling frequency $f_s = 1/T$, namely $x_s(t) = \sum_{n=-\infty}^{\infty} x(nT)\,\delta(t - nT)$. The frequency domain representation of an impulse train is also an impulse train, with impulses at integer multiples of $f_s$. Because multiplication in the time domain corresponds to convolution in the frequency domain, sampling convolves the frequency representation of the ideal sampler with the frequency representation of the signal, $X(f)$. Consider the frequency domain representation shown in Figure 1. The blue curve represents a signal which is called a bandlimited signal because it contains no energy at frequencies above a certain frequency, or bandwidth, $B$.

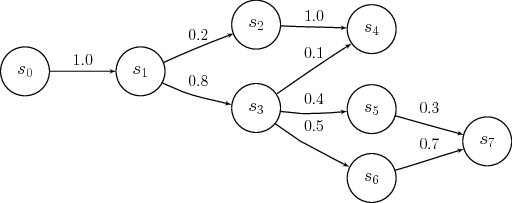

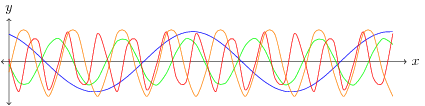

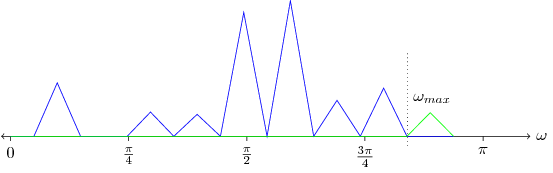

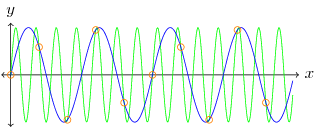
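The steps above can be written out as a short derivation. The notation is an assumption made here for illustration: $T$ is the sampling period, $f_s = 1/T$ the sampling frequency, $x(t)$ the signal with Fourier transform $X(f) = \int x(t)\,e^{-j 2\pi f t}\,dt$, and $*$ denotes convolution.

$$
\begin{aligned}
s(t) &= \sum_{n=-\infty}^{\infty} \delta(t - nT) \\
x_s(t) &= x(t)\,s(t) = \sum_{n=-\infty}^{\infty} x(nT)\,\delta(t - nT) \\
S(f) &= \frac{1}{T} \sum_{k=-\infty}^{\infty} \delta(f - k f_s) \\
X_s(f) &= (X * S)(f) = \frac{1}{T} \sum_{k=-\infty}^{\infty} X(f - k f_s)
\end{aligned}
$$

The last line shows that, under this convention, the spectrum of the sampled signal is a sum of copies of $X(f)$ spaced $f_s$ apart, each scaled by $1/T$.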

In order to sample this signal without losing any information, the sampling frequency $f_s$ must be greater than twice the bandwidth $B$ of the signal, $f_s > 2B$. The rate $2B$ is known as the Nyquist rate of the signal. If a signal is sampled at a frequency of $f_s$, then any frequencies higher than $f_s/2$ may be aliased, that is, they may show up as artificial lower frequencies. The frequency $f_s/2$ is known as the Nyquist frequency of the sampling system. Recall that the spectrum of the sampled signal is the convolution of the signal's spectrum, $X(f)$, with an impulse train, which produces a copy of the frequency response at every impulse, that is, at a spacing of $f_s$ in the frequency domain. When $f_s < 2B$, overlapping occurs between the copies of $X(f)$. The superposition principle tells us that the frequency response at these overlaps will be summed, introducing an artificial frequency response. Since the frequency domain representation is symmetric about the origin (it spans $-B$ to $B$), the sampling frequency must be more than twice as large as $B$ in order to prevent any overlap. The green waveform in Figure 1 represents a frequency greater than $f_s/2$ that was aliased to a lower frequency. Figure 2 shows two sinusoids. If the signal is sampled at the orange points, the two sinusoids are indistinguishable because the frequency of the green sinusoid is greater than the Nyquist frequency.

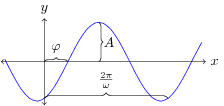

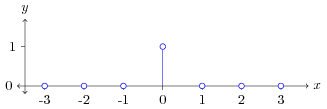
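The indistinguishable sinusoids described above can be reproduced numerically. The following is a minimal sketch using NumPy. The sampling frequency (10 Hz), the two tone frequencies (1 Hz and 11 Hz), and the helper name `alias_frequency` are all values chosen here for illustration and do not come from the figures.

```python
import numpy as np

def alias_frequency(f, fs):
    """Apparent frequency (Hz) of a real sinusoid of frequency f sampled at fs.

    Hypothetical helper for illustration: spectral copies repeat every fs,
    and a real signal's spectrum is symmetric, so f folds into [0, fs/2].
    """
    f_folded = f % fs
    return min(f_folded, fs - f_folded)

fs = 10.0            # sampling frequency in Hz (assumed value)
n = np.arange(20)    # sample indices
t = n / fs           # sample instants in seconds

low = np.sin(2 * np.pi * 1.0 * t)    # 1 Hz: below the Nyquist frequency fs/2 = 5 Hz
high = np.sin(2 * np.pi * 11.0 * t)  # 11 Hz: above the Nyquist frequency

# The two tones produce the same samples, so they cannot be told apart.
samples_match = np.allclose(low, high)
print(samples_match)
print(alias_frequency(11.0, fs))
```

Any tone whose frequency differs from 1 Hz by a multiple of `fs` yields the same samples here, which is why the signal has to be bandlimited below `fs / 2` before sampling.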

If the sampling is done at a rate greater than the Nyquist rate, then no information is lost and the continuous-time signal can be fully reconstructed from the discrete-time signal. In order to reconstruct the continuous-time signal from the discrete-time signal, we want to extract a single copy of the frequency response and take its inverse Fourier transform. Remember that convolving with the impulse train created copies of the frequency response at each impulse. We only want the copy centered at the origin, so we multiply by a rectangular window with unit height on the interval $[-f_s/2, f_s/2]$ and 0 everywhere else, referred to as the ideal reconstruction filter. This is equivalent to convolving the sampled signal in the time domain with the time-domain representation of the ideal reconstruction filter, a sinc function referred to as the ideal sinc, shown in Figure 3.
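The reconstruction step can be sketched numerically as a sum of shifted sinc functions weighted by the samples, $x(t) \approx \sum_n x(nT)\,\mathrm{sinc}\big((t - nT)/T\big)$. This is only an illustration under stated assumptions: the function name `sinc_reconstruct`, the 1 Hz test tone, and the 10 Hz sampling rate are chosen here, and the ideal infinite sum is truncated to a finite number of samples, so the result is approximate.

```python
import numpy as np

def sinc_reconstruct(samples, n, T, t):
    """Approximate x(t) from samples x(nT) by sinc interpolation.

    Hypothetical helper for illustration. np.sinc(u) is the normalized
    sinc, sin(pi*u) / (pi*u), which is 1 at u = 0 and 0 at other integers.
    """
    # One row per output time, one column per sample.
    kernel = np.sinc((t[:, None] - n[None, :] * T) / T)
    return kernel @ samples

T = 0.1                    # sampling period in seconds, so fs = 10 Hz (assumed)
f0 = 1.0                   # test tone in Hz, well below fs/2 = 5 Hz (assumed)
n = np.arange(-500, 501)   # finite set of sample indices (truncation)
samples = np.sin(2 * np.pi * f0 * n * T)

# Evaluate the reconstruction on a fine grid far from the truncated edges.
t = np.linspace(-1.0, 1.0, 201)
x_hat = sinc_reconstruct(samples, n, T, t)
x_true = np.sin(2 * np.pi * f0 * t)

max_error = np.max(np.abs(x_hat - x_true))
print(max_error)
```

The remaining error comes from truncating the sum. The sinc kernel decays slowly, which is one reason the ideal reconstruction filter is approximated rather than implemented exactly in practice.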