The term stochastic describes a system which has some element of randomness, and is therefore non-deterministic. A stochastic process is a process in which the transitions from one state to another may include some measure of uncertainty. Since there may be some uncertainty regarding the state of the process at any given time, it is natural to represent the state as a random variable. A stochastic process is modeled as a sequence of random variables representing the state, indexed by a time variable $t$ and written $X_t$ or $X(t)$.

A Markov chain is a type of stochastic process which exhibits the Markov property. That is, given the present state, the future states of the process do not depend statistically on the past states. In terms of conditional probability:

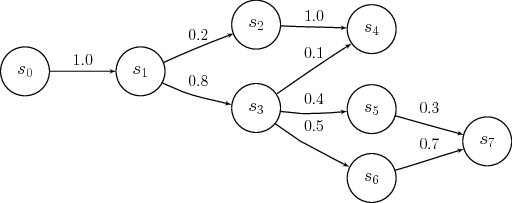

The probability of being in state $x_{t+1}$ at time $t+1$ is conditional only on the state $x_t$ at time $t$ and not on the previous states $x_0, \ldots, x_{t-1}$. Strictly speaking this is a first order Markov chain. The state at time $t+1$ of a chain of order, or memory, $m$ depends on the previous $m$ states. Figure 1 shows an example of a Markov chain.
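As a sketch of the higher order case, the condition for a chain of order $m$ can be written as follows. The symbols $X_t$ for the state at time $t$ and $x_t$ for its observed value are notation assumed here for illustration:

$$P(X_{t+1} = x_{t+1} \mid X_t = x_t, \ldots, X_0 = x_0) = P(X_{t+1} = x_{t+1} \mid X_t = x_t, \ldots, X_{t-m+1} = x_{t-m+1})$$

Setting $m = 1$ recovers the first order Markov property.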

We can define a variable $p_{ij}(t)$ to be the probability that the process transitions from state $i$ to state $j$ at time $t$. This is called a single step transition probability. This idea can be extended to an n-step transition probability $p_{ij}^{(n)}$, that is, the probability that the process transitions from state $i$ to state $j$ in $n$ steps. The probability that the process is in any particular state $j$ at time $t$ is given by the sum of the transition probabilities into $j$ from all states that the process could have been in at time $t-1$, each weighted by the probability of having been in that state. It can also be calculated as the corresponding weighted sum of the $n$-step transition probabilities starting at a time $t-n$.
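Written out, the two sums described above might look as follows. This is a sketch that assumes the transition probabilities do not change with time, so the time argument is dropped, and it uses $X_t$ for the state at time $t$, $p_{ij}$ for the single step probability of moving from state $i$ to state $j$, and $p_{ij}^{(n)}$ for its $n$-step counterpart:

$$P(X_t = j) = \sum_i P(X_{t-1} = i)\, p_{ij}$$

$$P(X_t = j) = \sum_i P(X_{t-n} = i)\, p_{ij}^{(n)}$$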

Since the probabilities of events in a sample space must sum to 1, we say that a vector whose elements are non-negative real numbers that sum to 1 is a stochastic vector. Likewise, a stochastic matrix may be defined depending on the meanings of the rows and columns. The usual meaning, and the one we will use here, is that each row of the matrix is a stochastic vector; such a matrix is called a right stochastic matrix. Given the transition probabilities from state $i$ to state $j$, it is natural to arrange these probabilities in a stochastic matrix. This matrix is called the transition matrix, $P$, such that $P_{ij} = p_{ij}$ as defined above. When the transition probabilities do not change with time, the probability of transitioning from state $i$ to $j$ in $n$ steps is $(P^n)_{ij}$. For completeness, $P^0 = I$; that is, when $n = 0$ the transition matrix is the identity matrix.
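The following is a minimal sketch of these definitions in plain Python. The two-state matrix `P` and the helper names `is_right_stochastic`, `mat_mul`, and `n_step` are hypothetical, chosen only for illustration, and the transition probabilities are assumed not to change with time.

```python
def is_right_stochastic(P, tol=1e-9):
    """Return True if every row of P is a stochastic vector."""
    return all(
        all(p >= 0 for p in row) and abs(sum(row) - 1.0) <= tol
        for row in P
    )


def mat_mul(A, B):
    """Multiply two matrices given as lists of rows."""
    inner, cols = len(B), len(B[0])
    return [
        [sum(row[r] * B[r][j] for r in range(inner)) for j in range(cols)]
        for row in A
    ]


def n_step(P, n):
    """Return the n-step transition matrix; n = 0 gives the identity."""
    size = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, P)
    return result


# Hypothetical two-state chain: each row sums to 1.
P = [[0.9, 0.1],
     [0.5, 0.5]]

P0 = n_step(P, 0)  # identity matrix
P2 = n_step(P, 2)  # entry [i][j]: probability of going from i to j in 2 steps
```

Repeated multiplication is used for clarity; for large `n`, exponentiation by squaring would need fewer multiplications.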

The distribution of the random variable $X_t$ representing the state of the process at time $t$ can be manipulated as a stochastic vector $\boldsymbol{\pi}_t$, where element $i$ is the probability that the process is in state $i$. The initial state of the system can be given as a stochastic vector $\boldsymbol{\pi}_0$.
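As an illustrative sketch, the state vector can be advanced one step at a time by multiplying it, as a row vector, by a right stochastic transition matrix. The matrix `P`, the initial vector `pi0`, and the function names below are assumptions made for the example, and the transition probabilities are assumed to be constant in time.

```python
def step(dist, P):
    """Advance a distribution one step: new[j] = sum_i dist[i] * P[i][j]."""
    return [
        sum(dist[i] * P[i][j] for i in range(len(dist)))
        for j in range(len(P[0]))
    ]


def distribution_at(dist0, P, t):
    """Return the state distribution after t steps, starting from dist0."""
    dist = list(dist0)
    for _ in range(t):
        dist = step(dist, P)
    return dist


# Hypothetical two-state chain that starts in state 0 with certainty.
P = [[0.9, 0.1],
     [0.5, 0.5]]
pi0 = [1.0, 0.0]

pi1 = distribution_at(pi0, P, 1)
pi2 = distribution_at(pi0, P, 2)
```

Each result is again a stochastic vector, since every row of `P` sums to 1.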

A state $j$ is said to be accessible from state $i$ ($i \to j$) if $p_{ij}^{(n)} > 0$ for some $n \ge 0$. If $i \to j$ and $j \to i$ then $i$ and $j$ are said to communicate ($i \leftrightarrow j$). A set of states which all communicate with each other and communicate with no other states forms an equivalence class called a communicating class. Furthermore, a communicating class $C$ is closed if the probability of leaving the class is zero, that is, $p_{ij} = 0$ for all $i \in C$ and $j \notin C$. If the entire state space of a Markov chain is a single communicating class then the chain is said to be irreducible. The graph of an irreducible Markov chain is a strongly connected directed graph.
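One possible way to find the communicating classes of a finite chain is to compute which states are accessible from which, then group states that are mutually accessible. The sketch below does this with Warshall's transitive closure; the function names and the three-state matrix `P` are hypothetical.

```python
def reachable(P):
    """reach[i][j] is True if state j is accessible from state i (n >= 0 steps)."""
    n = len(P)
    reach = [[i == j or P[i][j] > 0 for j in range(n)] for i in range(n)]
    for k in range(n):  # Warshall's transitive closure
        for i in range(n):
            for j in range(n):
                if reach[i][k] and reach[k][j]:
                    reach[i][j] = True
    return reach


def communicating_classes(P):
    """Group states into classes of mutually accessible states."""
    reach = reachable(P)
    n = len(P)
    classes, seen = [], set()
    for i in range(n):
        if i in seen:
            continue
        cls = [j for j in range(n) if reach[i][j] and reach[j][i]]
        seen.update(cls)
        classes.append(cls)
    return classes


def is_closed(P, cls):
    """A class is closed if no transition leaves it."""
    members = set(cls)
    return all(
        P[i][j] == 0 for i in cls for j in range(len(P)) if j not in members
    )


def is_irreducible(P):
    """A chain is irreducible if all states form one communicating class."""
    return len(communicating_classes(P)) == 1


# Hypothetical chain: states 0 and 1 communicate; state 2 can leave but never return.
P = [[0.5, 0.5, 0.0],
     [0.5, 0.5, 0.0],
     [0.2, 0.0, 0.8]]

classes = communicating_classes(P)
```

Here `classes` contains the closed class `[0, 1]` and the non-closed class `[2]`, so this chain is not irreducible.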

Cycles within the graph of the Markov chain lead to a notion of periodicity. A state $i$ has a period of $k$ if $k$ is the largest integer such that $p_{ii}^{(n)} > 0$ only when $n$ is a multiple of $k$, that is, $k$ is the greatest common divisor of all possible $n \ge 1$ for which a return to $i$ in $n$ steps has non-zero probability. If $k = 1$ then the state is called aperiodic. A Markov chain itself is said to be aperiodic if every state in the chain is aperiodic. If there is a non-zero probability of never returning to state $i$ after starting from state $i$ then the state is said to be transient. A non-transient state is called recurrent or persistent.
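The period of a state in a finite chain can be estimated by taking the greatest common divisor of the step counts at which a return is possible. The sketch below tracks only which states can be occupied after each step, not the probabilities themselves. The function name `period` and the default search horizon of three times the number of states are assumptions of this example; the horizon should be long enough for a finite chain but is not taken from the text.

```python
from math import gcd


def period(P, i, horizon=None):
    """Return the gcd of all step counts n <= horizon at which a return
    to state i has non-zero probability, or 0 if no return is found."""
    n_states = len(P)
    if horizon is None:
        horizon = 3 * n_states  # assumed search bound for a finite chain
    # current[j] is True if state j can be occupied after `n` steps from i
    current = [j == i for j in range(n_states)]
    k = 0
    for n in range(1, horizon + 1):
        current = [
            any(current[a] and P[a][j] > 0 for a in range(n_states))
            for j in range(n_states)
        ]
        if current[i]:
            k = gcd(k, n)
    return k


# Hypothetical examples.
two_cycle = [[0.0, 1.0],
             [1.0, 0.0]]          # returns only at even step counts
three_cycle = [[0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0],
               [1.0, 0.0, 0.0]]   # returns only at multiples of 3
lazy = [[0.5, 0.5],
        [1.0, 0.0]]               # self-loop makes state 0 aperiodic
```

With these matrices, `period(two_cycle, 0)` gives 2, `period(three_cycle, 0)` gives 3, and `period(lazy, 0)` gives 1.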

In the special case that a Markov chain with a finite number of states is both irreducible and aperiodic it is called ergodic, meaning that it converges to a single unique stationary distribution, called the equilibrium distribution, regardless of the initial distribution. A stationary distribution is a stochastic vector $\boldsymbol{\pi}$ where each element $\pi_j$ satisfies the property: